An Amazon Alexa Skill Using AWS Lambda and the Strava API

TL;DR: I built an Amazon Alexa voice skill on AWS Lambda using the Strava API (and OAuth)

I got an Amazon Echo as a Christmas present (to myself…) and have been really impressed with it. I expected it to be a geek toy that nobody else in the house would use, but it gets used a lot by other family members for things like Spotify and interactive games (my 4-year-old likes to try to beat Alexa at 20 questions!). I think the reason it has gained such traction in our home is the quality of the voice interaction.

I wanted to see how difficult it would be to write an app (Skill) for Alexa. I use Strava a lot, so I decided to see how difficult it would be to get a Skill to consume the API. I’ve outlined the steps for setting up the Skill and some of the code used to query the API.

Before I get started, this is an example of the response from the skill:

Disclaimer

There’s going to be lots of screenshots (boo) of the AWS consoles for the first half of this post. The code (yay) is towards the end.

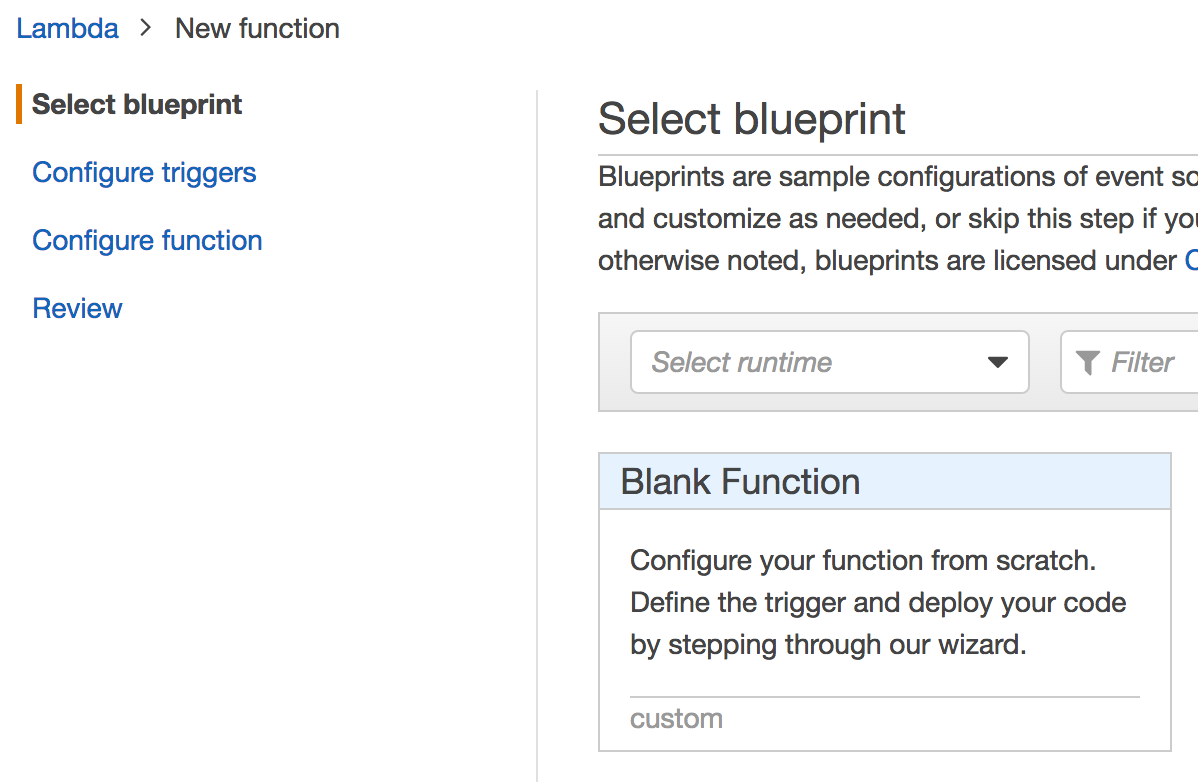

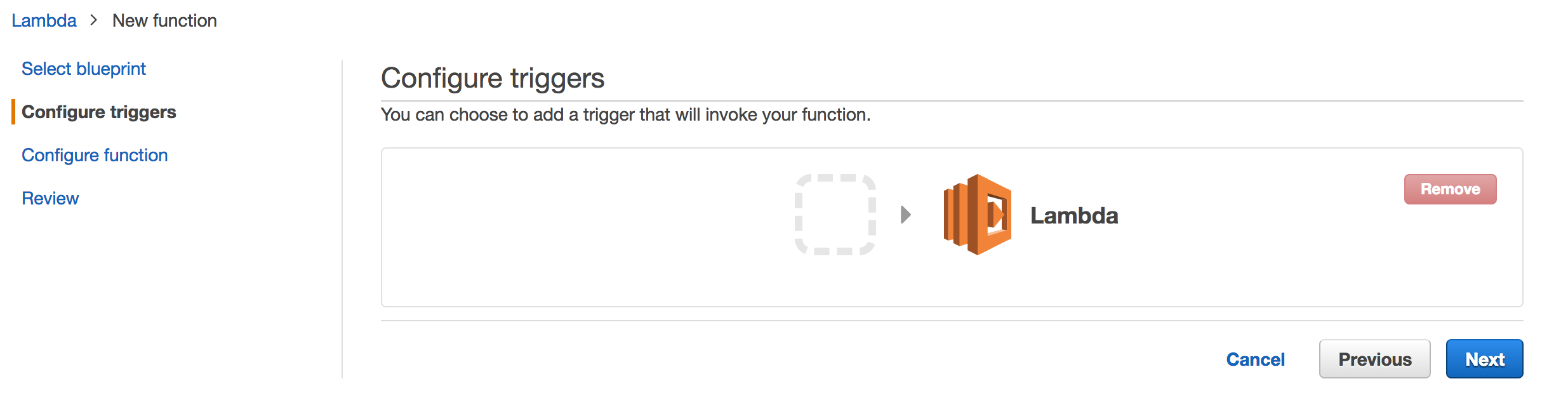

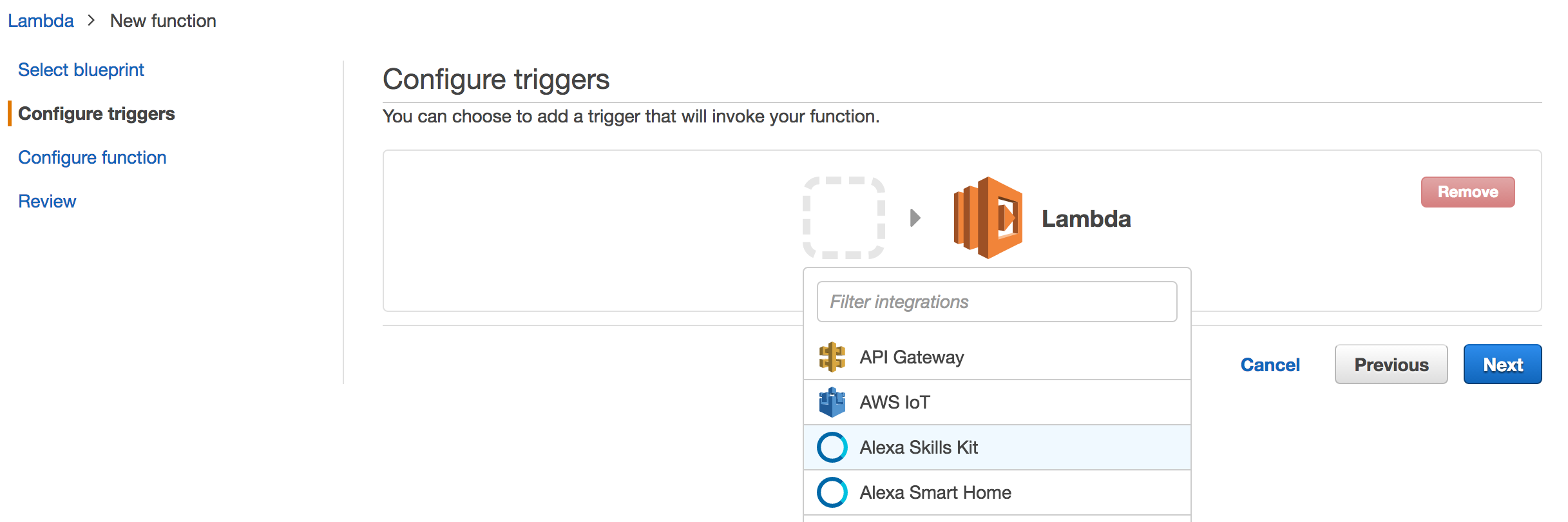

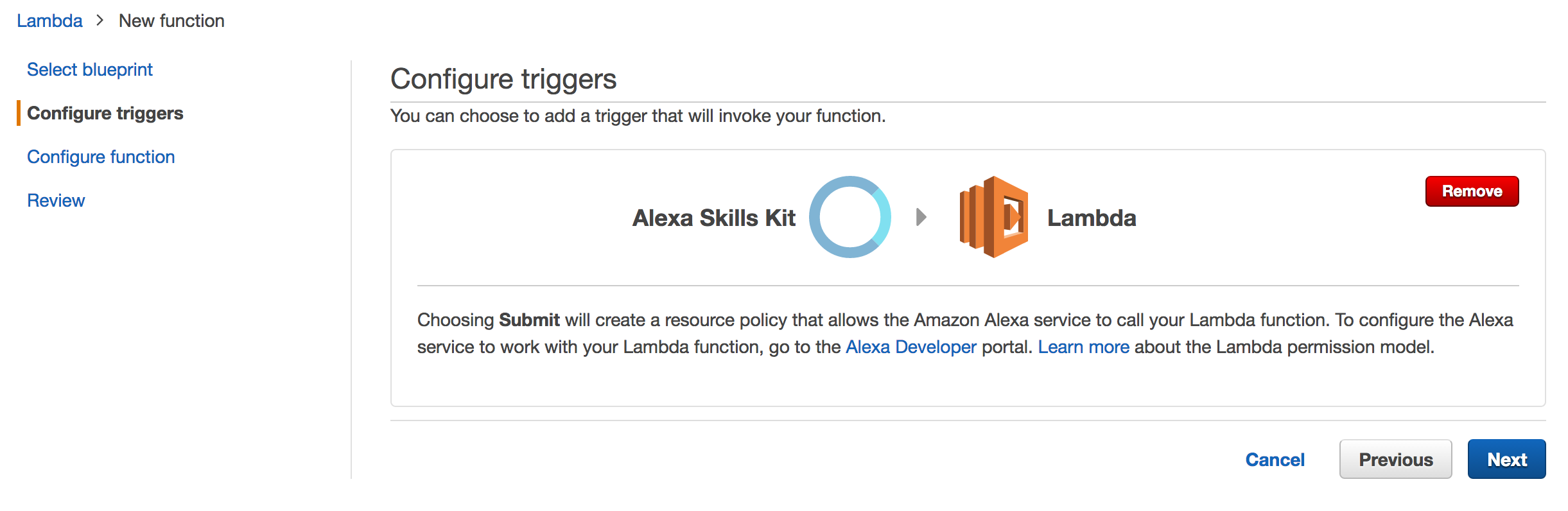

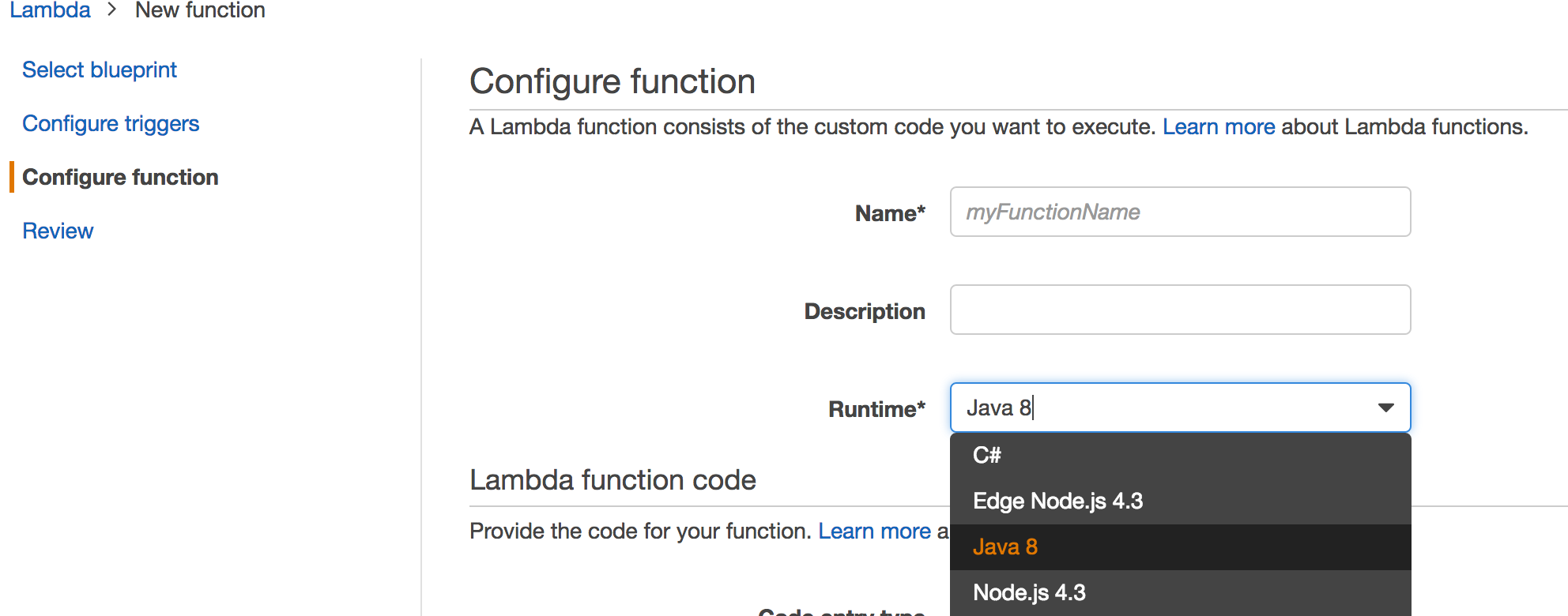

AWS Lambda

The first step is to create a Lambda function that will host the endpoint for the Skill.

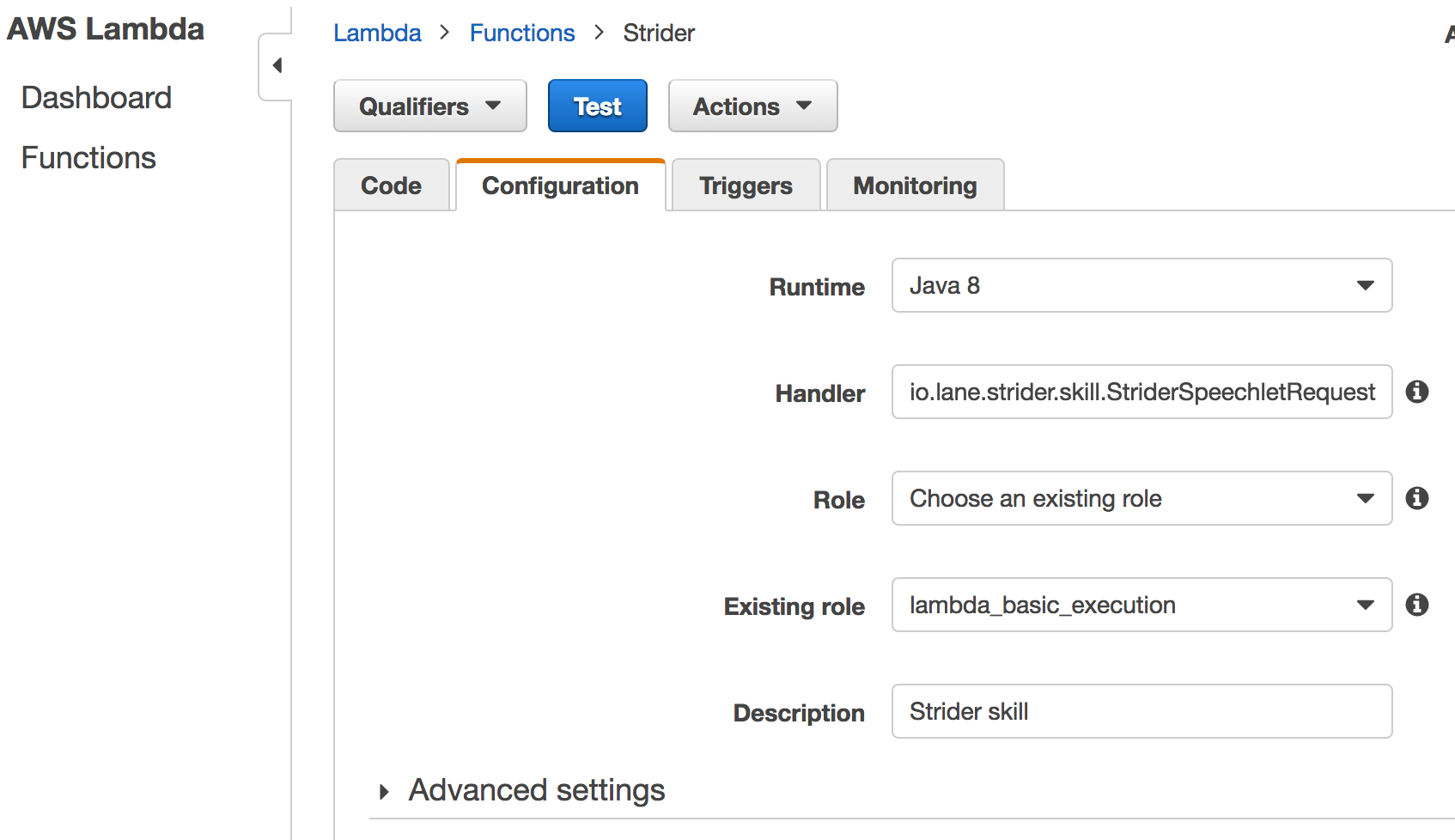

Skill Configuration

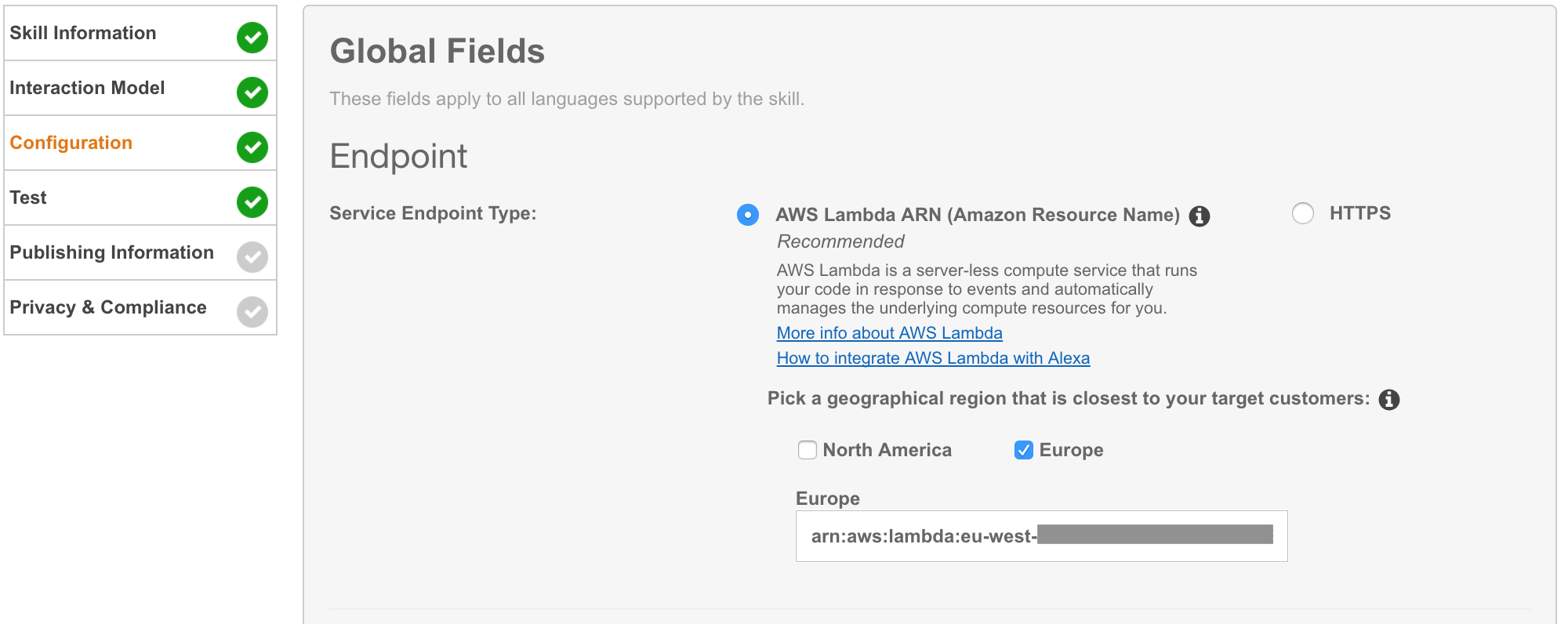

Once the Lambda function is set up and you have an Amazon Resource Name (ARN) to reference it, you can set up your Skill to point at it.

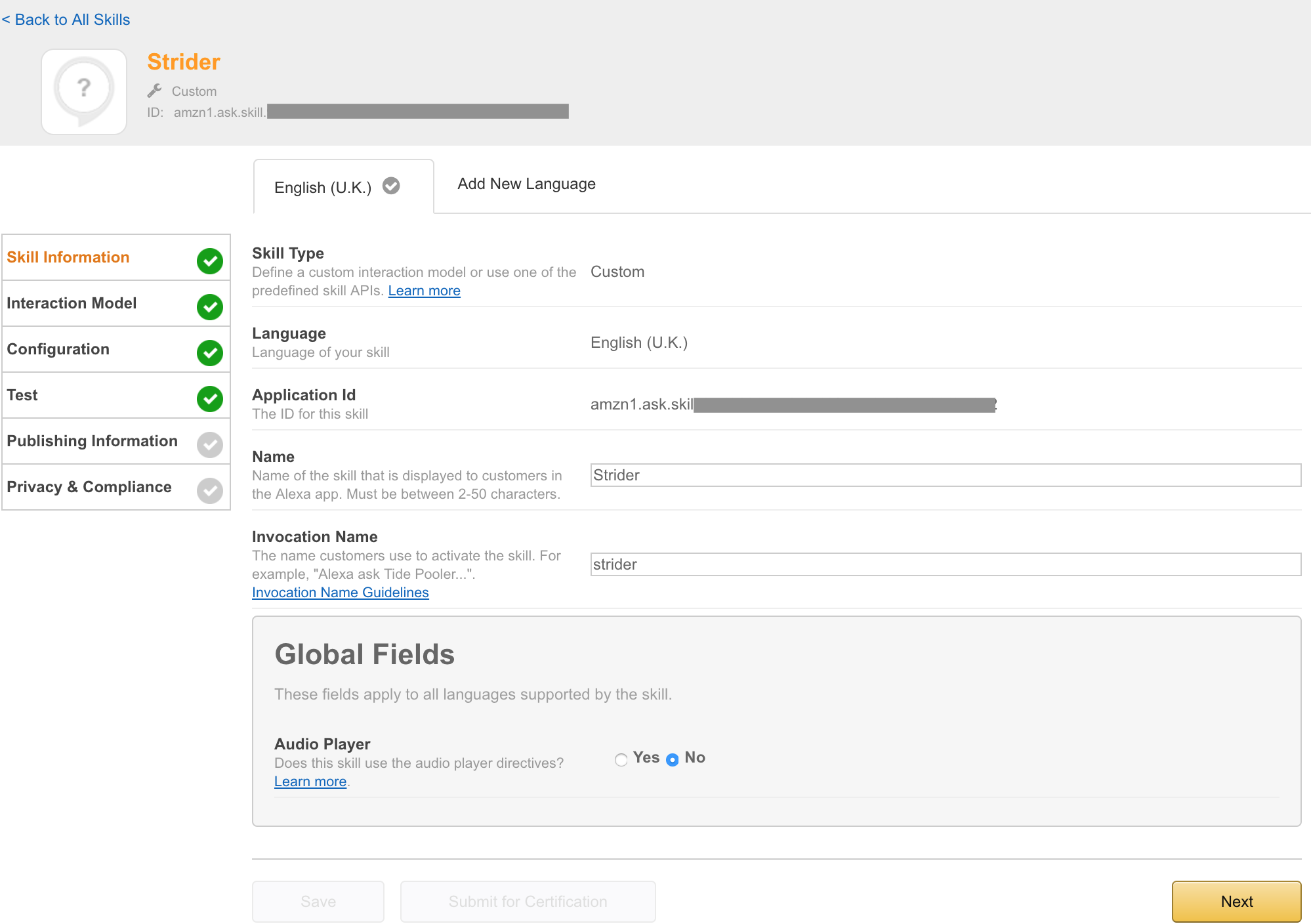

You create a Skill from the developer.amazon.com portal.

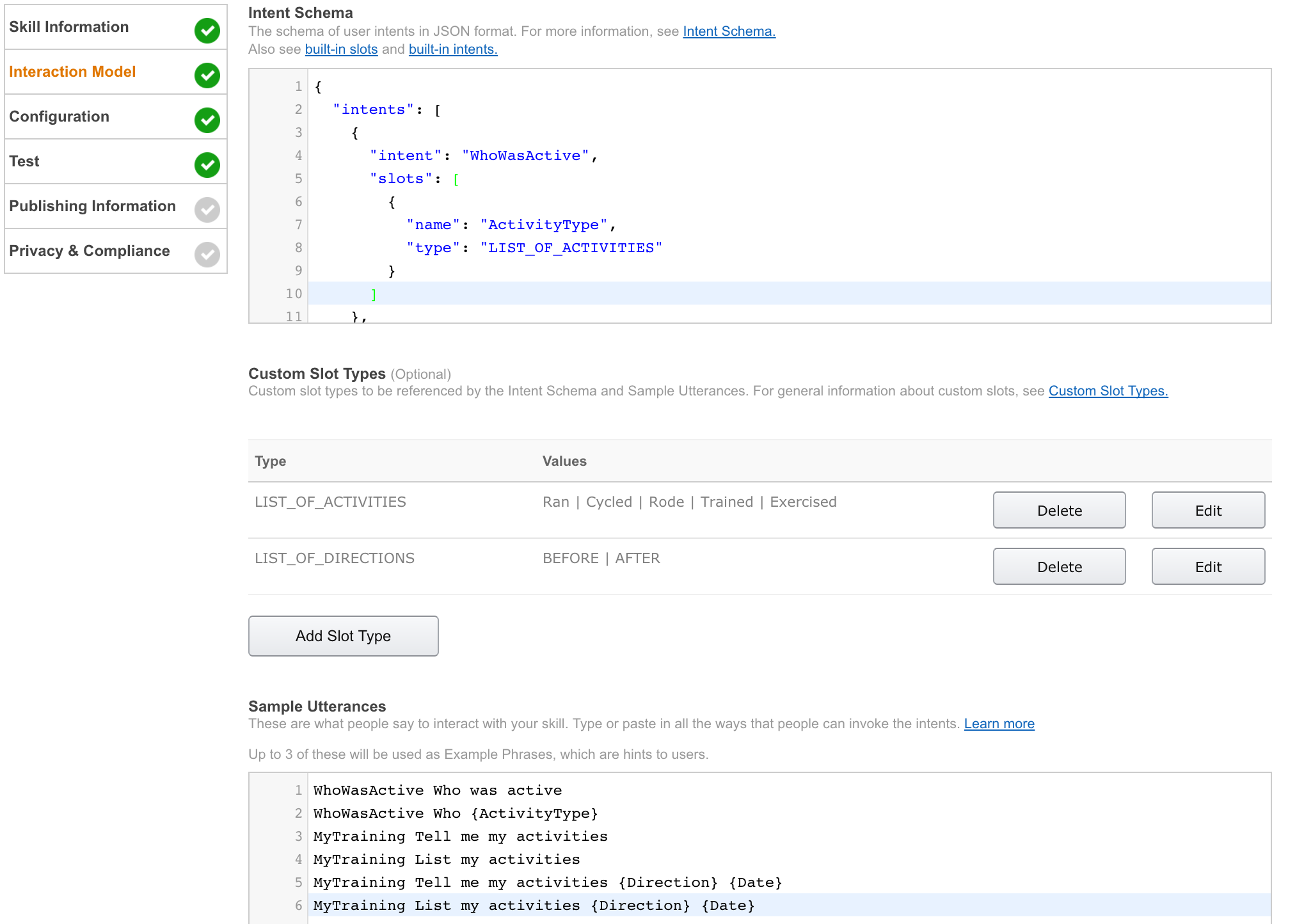

The interaction model is made up of Intents, Slots and Utterances.

I defined two intents: one to allow a user to query their own activities and the other to query their friends' activities.

    {
      "intents": [
        {
          "slots": [
            {
              "name": "ActivityType",
              "type": "LIST_OF_ACTIVITIES"
            }
          ],
          "intent": "WhoWasActive"
        },
        {
          "slots": [
            {
              "name": "Direction",
              "type": "LIST_OF_DIRECTIONS"
            },
            {
              "name": "Date",
              "type": "AMAZON.DATE"
            }
          ],
          "intent": "MyTraining"
        }
      ]
    }

Slots are like variables that Amazon extracts from the user's request based on how the user invokes the intent.

There are built-in slots like AMAZON.DATE and you can create custom slots too; LIST_OF_ACTIVITIES is a custom slot I defined.

The Sample Utterances are where you list the ways you expect users to interact with your skill. You associate utterances with Intents in this section, as well as adding your slots as tokens.

For example, if a user said Who Ran or Who Cycled, both would match the utterance WhoWasActive Who {ActivityType}. The Intent requested from your endpoint would be WhoWasActive and the ActivityType Slot would contain Ran or Cycled.
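To make the token idea concrete, here is a toy sketch of how a template such as Who {ActivityType} could be turned into a pattern that pulls out the slot value. This is only an illustration: Alexa's real matching is done by Amazon's speech models, not by a regex, and the class and method names (UtteranceSketch, match) are made up for this example. The template is the part of the sample utterance after the intent name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical illustration only; not how Alexa resolves utterances internally.
final class UtteranceSketch {

    // Finds {SlotName} tokens inside an utterance template.
    private static final Pattern SLOT_TOKEN = Pattern.compile("\\{([A-Za-z][A-Za-z0-9]*)\\}");

    // Returns slot name -> spoken value, or null when the phrase does not fit the template.
    static Map<String, String> match(String template, String spoken) {
        List<String> slotNames = new ArrayList<>();
        StringBuilder regex = new StringBuilder();
        Matcher token = SLOT_TOKEN.matcher(template);
        int last = 0;
        while (token.find()) {
            // Quote the literal text, then turn the {Slot} token into a named group.
            regex.append(Pattern.quote(template.substring(last, token.start())));
            regex.append("(?<").append(token.group(1)).append(">.+)");
            slotNames.add(token.group(1));
            last = token.end();
        }
        regex.append(Pattern.quote(template.substring(last)));

        Matcher m = Pattern.compile(regex.toString(), Pattern.CASE_INSENSITIVE)
                .matcher(spoken.trim());
        if (!m.matches()) {
            return null;
        }
        Map<String, String> slots = new LinkedHashMap<>();
        for (String name : slotNames) {
            slots.put(name, m.group(name));
        }
        return slots;
    }

    public static void main(String[] args) {
        System.out.println(match("Who {ActivityType}", "Who Ran"));    // {ActivityType=Ran}
        System.out.println(match("Who {ActivityType}", "Who Cycled")); // {ActivityType=Cycled}
    }
}
```

Both phrases resolve to the same template with a different ActivityType value, which is the behaviour described above.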

For more info see the docs

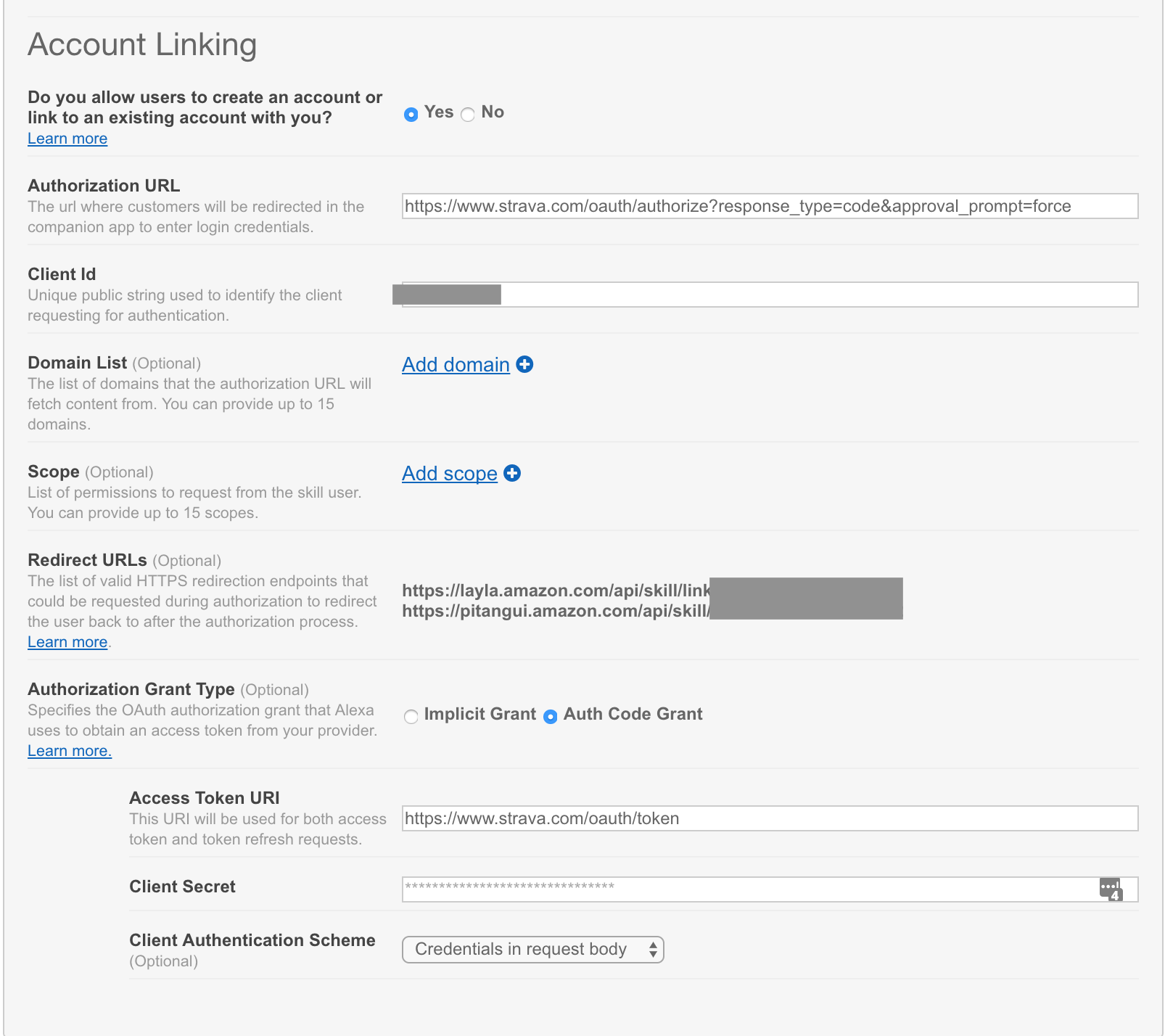

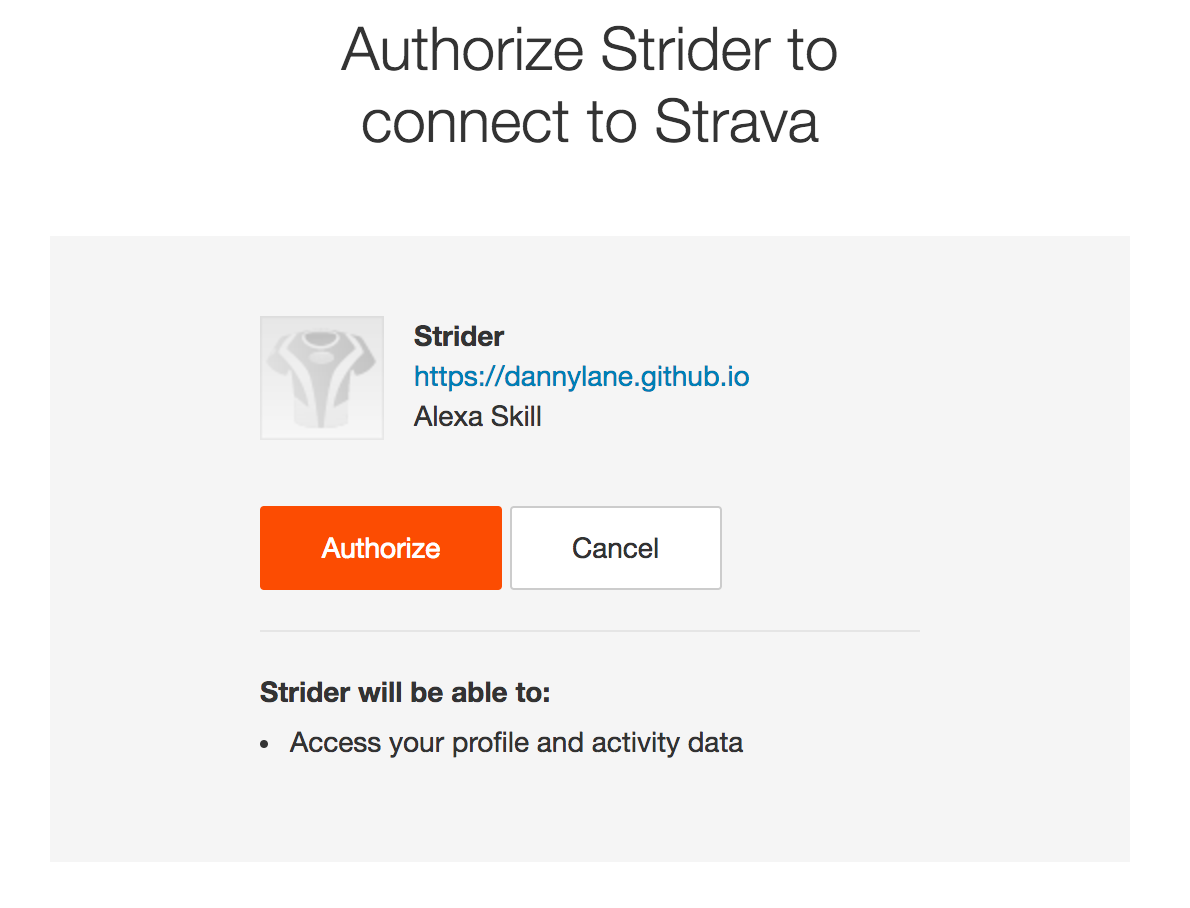

Making the skill work with Strava OAuth took a while to configure correctly but didn’t require any code, which was nice. Once this config is set up, users get prompted to authorise the app from their Strava account.

Once the user authorizes the skill, Amazon takes care of requesting and refreshing the auth token. The token is supplied to your request handler with every invocation of the skill and you pass the token through to the API call. Painless.
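As a rough sketch of what "passing the token through" means at the HTTP level, the snippet below builds (but does not send) a request with the token in an Authorization: Bearer header, using the JDK 11 HTTP client. This is not the code from the skill, which went through a Strava client library; the class and method names here are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper; the skill itself delegated this to a Strava client library.
final class StravaRequestSketch {

    // Builds a GET request for the logged-in athlete's activities,
    // authenticated with the OAuth access token that Alexa supplies.
    static HttpRequest activitiesRequest(String accessToken) {
        return HttpRequest.newBuilder()
                .uri(URI.create("https://www.strava.com/api/v3/athlete/activities"))
                .header("Authorization", "Bearer " + accessToken)
                .GET()
                .build();
    }
}
```

Sending it would be a matter of handing the request to an HttpClient; the point is simply that the token from the session travels with every API call.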

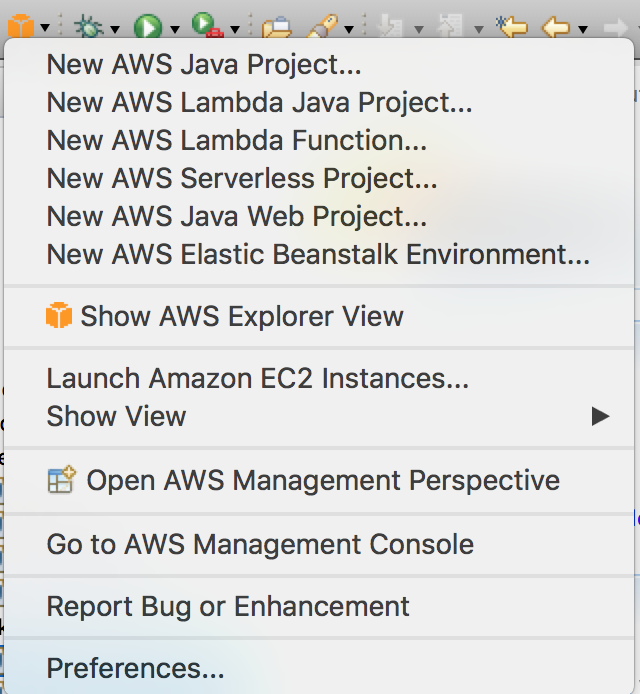

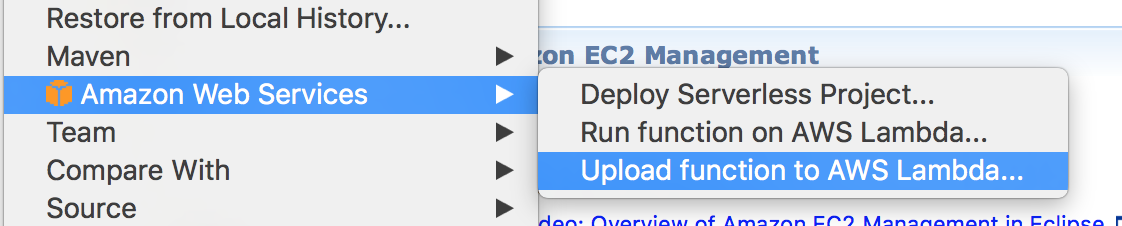

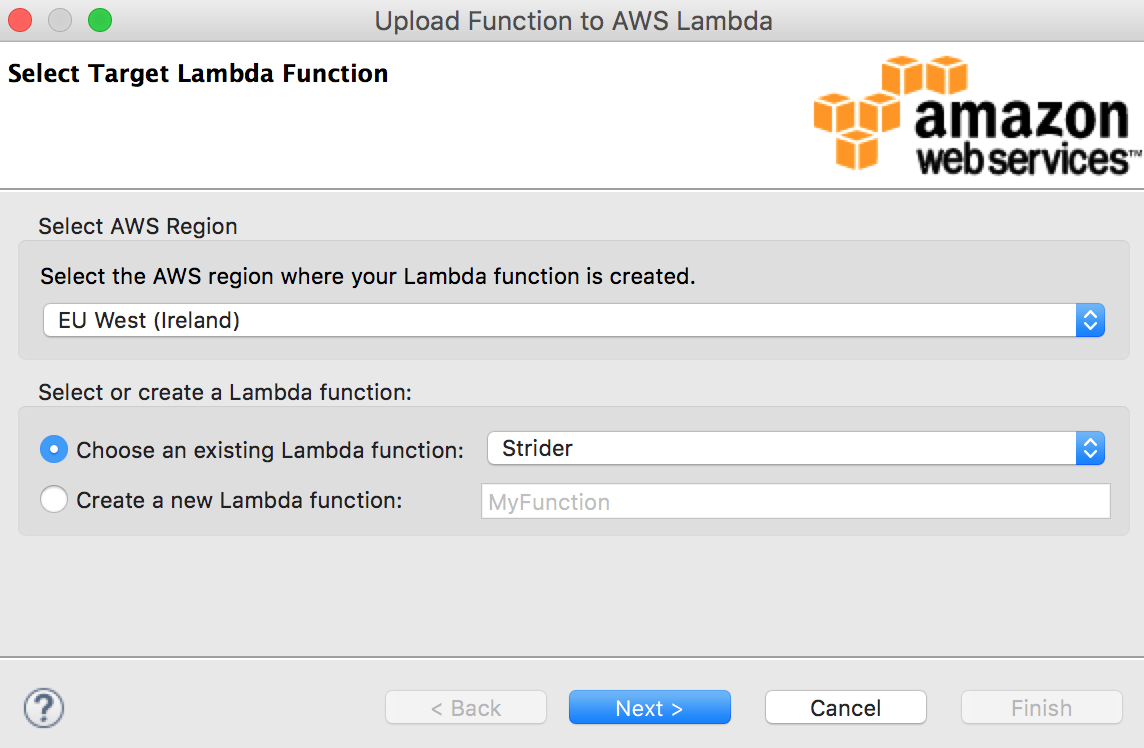

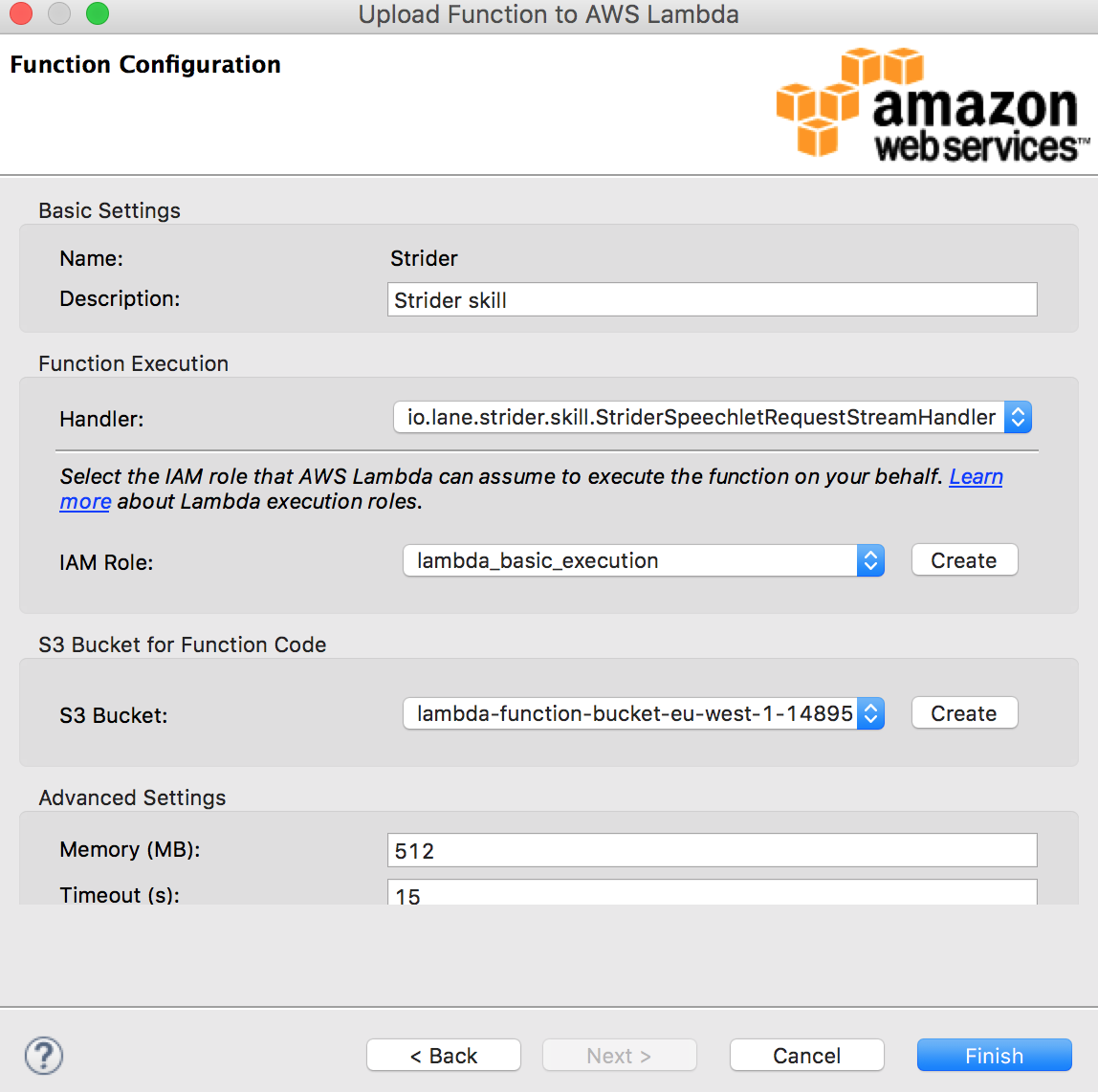

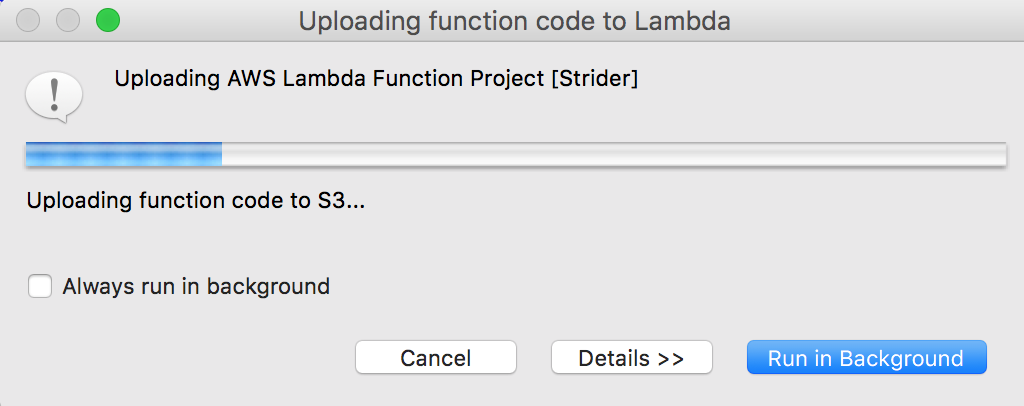

AWS Eclipse Plugin

There is an AWS Plugin for Eclipse available here.

Code

I used the alexa-skills-kit-java library from Amazon for taking care of a lot of the boilerplate.

The entry point to the code is the RequestStreamHandler. This is the handler defined in the Skill config:

    import java.util.HashSet;
    import java.util.Set;

    import com.amazon.speech.speechlet.lambda.SpeechletRequestStreamHandler;

    public class StriderSpeechletRequestStreamHandler extends SpeechletRequestStreamHandler {

        private static final Set<String> supportedApplicationIds = new HashSet<String>();

        static {
            /*
             * This Id can be found on https://developer.amazon.com/edw/home.html#/
             * "Edit" the relevant Alexa Skill and put the relevant Application Ids
             * in this Set.
             */
            supportedApplicationIds.add("amzn1.ask.skill.<snip>");
        }

        public StriderSpeechletRequestStreamHandler() {
            super(new StriderSpeechlet(), supportedApplicationIds);
        }
    }

The StriderSpeechlet class, which implements Speechlet, is where you handle events like onLaunch, onIntent, onSessionEnded, etc.

    @Override
    public SpeechletResponse onIntent(final IntentRequest request, final Session session) throws SpeechletException {
        IExecuteQuery query = null;
        Intent intent = request.getIntent();
        String intentName = (intent != null) ? intent.getName() : null;
        String intentDate = getSlotValue(intent, "Date");
        String intentDirection = getSlotValue(intent, "Direction");
        // OAuth token that Alexa supplies once the user has linked their Strava account
        String token = session.getUser().getAccessToken();

        try {
            if ("WhoWasActive".equals(intentName)) {
                query = new FollowerQuery(token, intentDate);
            } else if ("MyTraining".equals(intentName)) {
                query = new SelfQuery(token, intentDirection, intentDate);
            } else if ("AMAZON.HelpIntent".equals(intentName)) {
                // TODO help me mario !!!
            } else {
                throw new SpeechletException("Invalid Intent");
            }
        } catch (Exception e) {
            throw new SpeechletException(e.getMessage());
        }

        // The help branch leaves query null, so fail cleanly instead of with a NullPointerException
        if (query == null) {
            throw new SpeechletException("No query available for intent " + intentName);
        }

        SsmlOutputSpeech speech = new SsmlOutputSpeech();
        // Only the first formatted result is spoken
        speech.setSsml(query.Execute().get(0));
        return SpeechletResponse.newTellResponse(speech);
    }

Strava Queries

Strava exposes a RESTful API where a user, once authenticated, can query info about their activities, friends' activities, segments, etc. The docs for the API are here.

    {
      "id": 321934,
      "athlete": {
        "id": 227615,
        "resource_state": 1
      },
      "name": "Evening Ride",
      "description": "the best ride ever",
      "distance": 4475.4,
      "moving_time": 1303,
      "elapsed_time": 1333,
      "total_elevation_gain": 154.5,
      "elev_high": 331.4,
      "elev_low": 276.1,
      "type": "Run",
      "start_date": "2012-12-13T03:43:19Z",
      "start_date_local": "2012-12-12T19:43:19Z",
      "start_latlng": [37.8, -122.27],
      "end_latlng": [37.8, -122.27],
      "photos": {},
      "map": {},
      "average_speed": 3.4,
      "max_speed": 4.514,
      "segment_efforts": [],
      "laps": []
    }

These are the endpoints I was most interested in:

https://www.strava.com/api/v3/athlete/activities - Logged-in user's activities

https://www.strava.com/api/v3/activities/following - Friends' activities

https://www.strava.com/api/v3/athletes/:id - Used for backfilling the athlete name for friends' activities
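For the first endpoint, the before and after filters are query parameters that take epoch timestamps in seconds, and per_page caps the number of results. Below is a minimal sketch of turning a Direction and Date slot pair into such a URL. It assumes the date arrives as a full ISO date like 2017-03-26 (AMAZON.DATE can also produce weeks or months, which this does not handle) and uses midnight UTC as the cut-off; the class and method names are hypothetical.

```java
import java.time.LocalDate;
import java.time.ZoneOffset;

// Hypothetical helper showing the query parameters; not the code used in the skill.
final class ActivityQuerySketch {

    static final String ACTIVITIES_URL = "https://www.strava.com/api/v3/athlete/activities";

    // direction: the Direction slot value ("before" or "after"); anything else means no date filter.
    // isoDate:   a full ISO date such as "2017-03-26" (assumption: no week or month values).
    static String buildUrl(String direction, String isoDate, int perPage) {
        String url = ACTIVITIES_URL + "?per_page=" + perPage;
        if (direction == null || isoDate == null) {
            return url;
        }
        // Strava expects epoch seconds, so convert the date at midnight UTC.
        long epochSeconds = LocalDate.parse(isoDate).atStartOfDay(ZoneOffset.UTC).toEpochSecond();
        if ("before".equalsIgnoreCase(direction)) {
            return url + "&before=" + epochSeconds;
        }
        if ("after".equalsIgnoreCase(direction)) {
            return url + "&after=" + epochSeconds;
        }
        return url;
    }

    public static void main(String[] args) {
        // Prints https://www.strava.com/api/v3/athlete/activities?per_page=5&before=1490486400
        System.out.println(buildUrl("before", "2017-03-26", 5));
    }
}
```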

I used the JStrava library for querying the Strava REST API.

    // SelfQuery: the logged-in user's activities, optionally filtered by date
    public List<String> Execute() {
        List<Activity> activities;
        if ("before".equalsIgnoreCase(_direction)) {
            activities = strava.getCurrentAthleteActivitiesBeforeDate(_date.getMillis());
        } else if ("after".equalsIgnoreCase(_direction)) {
            activities = strava.getCurrentAthleteActivitiesAfterDate(_date.getMillis());
        } else {
            activities = strava.getCurrentAthleteActivities();
        }
        // Keep at most the first 5 activities
        int max = Math.min(5, activities.size());
        return ParseResults(activities.subList(0, max));
    }

    // FollowerQuery: friends' activities
    public List<String> Execute() {
        List<Activity> activities = strava.getCurrentFriendsActivities();
        // Keep at most the first 5 activities
        int max = Math.min(5, activities.size());
        return ParseResults(activities.subList(0, max));
    }

    // Backfill the full athlete details, looking each athlete up only once
    private List<Activity> FillAthleteInfo(List<Activity> activities) {
        Map<Integer, Athlete> athletes = new HashMap<Integer, Athlete>();
        int currentId;
        Athlete athlete;
        for (Activity activity : activities) {
            currentId = activity.getAthlete().getId();
            if (!athletes.containsKey(currentId)) {
                athlete = strava.findAthlete(currentId);
                athletes.put(currentId, athlete);
            } else {
                athlete = athletes.get(currentId);
            }
            activity.setAthlete(athlete);
        }
        return activities;
    }

    // Arguments: 1 = athlete, 2 = activity type, 3 = distance in km, 4 = hours, 5 = minutes
    private String responseFormat = "%1$s %2$s <say-as interpret-as=\"unit\">%3$.1fkm</say-as> "
            + "in <say-as interpret-as=\"unit\">%4$dhours</say-as>"
            + "<say-as interpret-as=\"unit\">%5$dminutes</say-as>";

    private String FormatActivity(Activity activity) {
        double km = activity.getDistance() / 1000;
        int hours = activity.getElapsed_time() / 3600;
        int minutes = (activity.getElapsed_time() % 3600) / 60;
        return String.format(responseFormat, activity.getAthlete().toString(), activity.getType(), km, hours, minutes);
    }

Yes, I was being lazy and inserted the type into the response directly, so "Run" is spoken instead of the past tense "ran".
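A less lazy version might look something like the sketch below. It is not what the skill does: the verb table is a made-up mapping for a handful of Strava activity types, the units are spelled out as plain words rather than wrapped in say-as tags, and the athlete name is XML-escaped so that a character like & cannot break the SSML. All names here are hypothetical.

```java
import java.util.Locale;
import java.util.Map;

// Hypothetical alternative to FormatActivity; plain JDK types stand in for the Strava classes.
final class SpokenActivitySketch {

    // Assumed mapping from activity type to a past-tense verb (only a few types covered).
    static final Map<String, String> PAST_TENSE = Map.of(
            "Run", "ran",
            "Ride", "rode",
            "Swim", "swam",
            "Walk", "walked");

    static String pastTense(String type) {
        // Fall back to a generic phrasing for types missing from the table.
        return PAST_TENSE.getOrDefault(type, "completed a " + type.toLowerCase(Locale.ROOT) + " of");
    }

    // Escapes the characters that are special in XML so free text is safe inside SSML.
    static String escapeXml(String text) {
        return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }

    static String format(String athlete, String type, double metres, int elapsedSeconds) {
        double km = metres / 1000;
        int hours = elapsedSeconds / 3600;
        int minutes = (elapsedSeconds % 3600) / 60;
        return String.format(Locale.ROOT, "%s %s %.1f kilometres in %d %s and %d %s",
                escapeXml(athlete), pastTense(type), km,
                hours, hours == 1 ? "hour" : "hours",
                minutes, minutes == 1 ? "minute" : "minutes");
    }

    public static void main(String[] args) {
        // Prints: Danny Lane ran 16.2 kilometres in 1 hour and 8 minutes
        System.out.println(format("Danny Lane", "Run", 16200, 4080));
    }
}
```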

Speech Synthesis Markup Language

Alexa allows you to tailor how the response is delivered (spoken) to the user using SSML:

    "outputSpeech": {
      "type": "SSML",
      "ssml": "Danny Lane Run <say-as interpret-as=\"unit\">16.2km</say-as> in <say-as interpret-as=\"unit\">1hours</say-as><say-as interpret-as=\"unit\">8minutes</say-as>"
    },

Result

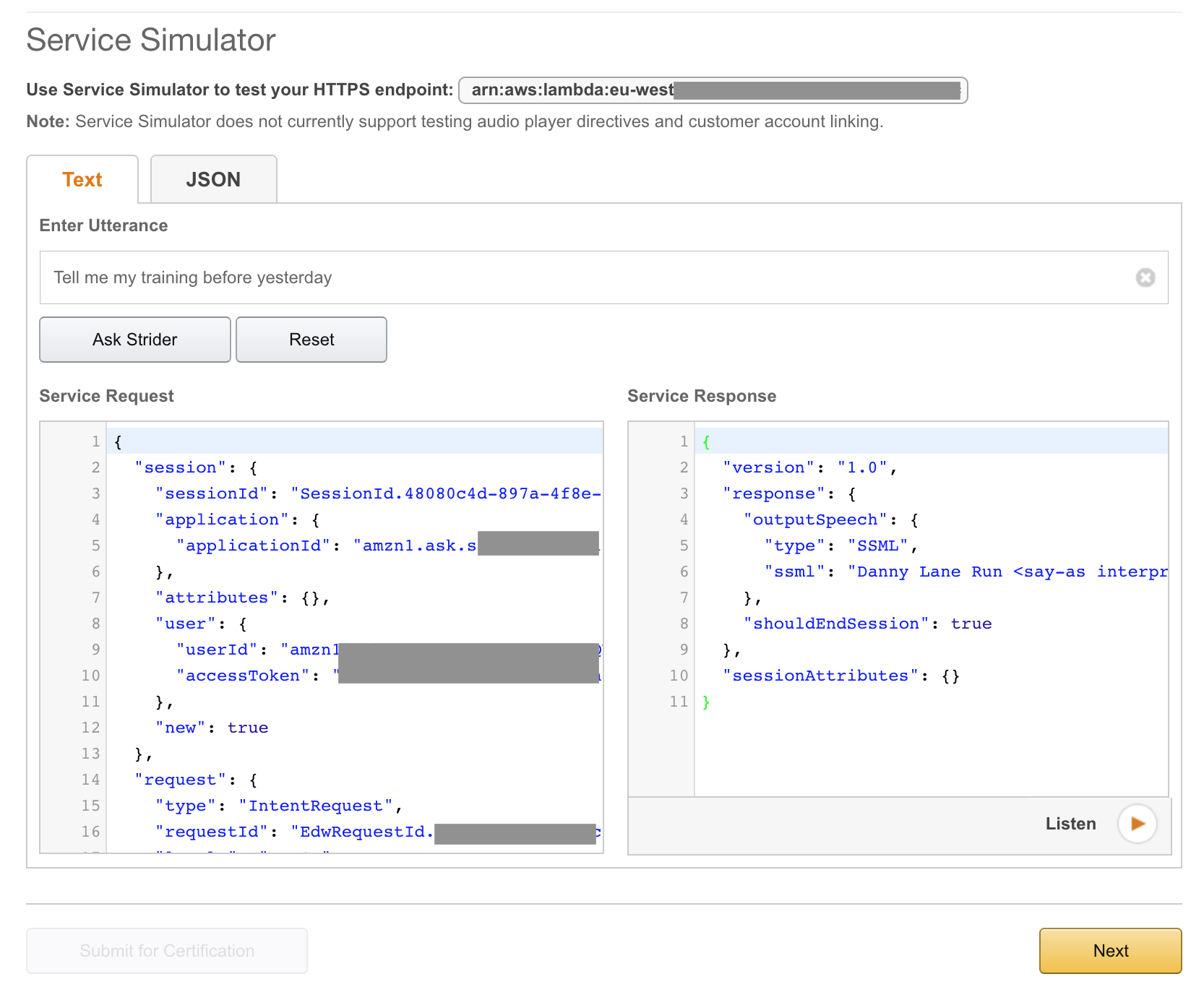

The code above is all very agricultural, but it’s enough to get a response from Alexa. To test the Skill you can input an utterance on the test page and see the request that goes to your app (note the session token and how the slots/intent are broken up), along with the response.

The request/response for 'ask Strider Tell me my activities yesterday' is below:

    {
      "session": {
        "sessionId": "SessionId.<snip>",
        "application": {
          "applicationId": "amzn1.ask.skill.<snip>"
        },
        "attributes": {},
        "user": {
          "userId": "amzn1.ask.account.<snip>",
          "accessToken": "<snip>"
        },
        "new": true
      },
      "request": {
        "type": "IntentRequest",
        "requestId": "EdwRequestId.<snip>",
        "locale": "en-GB",
        "timestamp": "2017-03-27T20:32:51Z",
        "intent": {
          "name": "MyTraining",
          "slots": {
            "Direction": {
              "name": "Direction",
              "value": "before"
            },
            "Date": {
              "name": "Date",
              "value": "2017-03-26"
            }
          }
        }
      },
      "version": "1.0"
    }

    {
      "version": "1.0",
      "response": {
        "outputSpeech": {
          "type": "SSML",
          "ssml": "Danny Lane Run <say-as interpret-as=\"unit\">16.2km</say-as> in <say-as interpret-as=\"unit\">1hours</say-as><say-as interpret-as=\"unit\">8minutes</say-as>"
        },
        "shouldEndSession": true
      },
      "sessionAttributes": {}
    }

The audio file that Alexa generates for the SSML above is the one I linked to at the top of the post:

Friend Query

    "intent": {
      "name": "WhoWasActive",
      "slots": {
        "ActivityType": {
          "name": "ActivityType"
        }
      }
    }

    "response": {
      "outputSpeech": {
        "type": "SSML",
        "ssml": "Brian McCarthy Ride <say-as interpret-as=\"unit\">31.0km</say-as> in <say-as interpret-as=\"unit\">1hours</say-as><say-as interpret-as=\"unit\">0minutes</say-as>"
      },
      "shouldEndSession": true
    }

The SSML for the response above sounds like this:

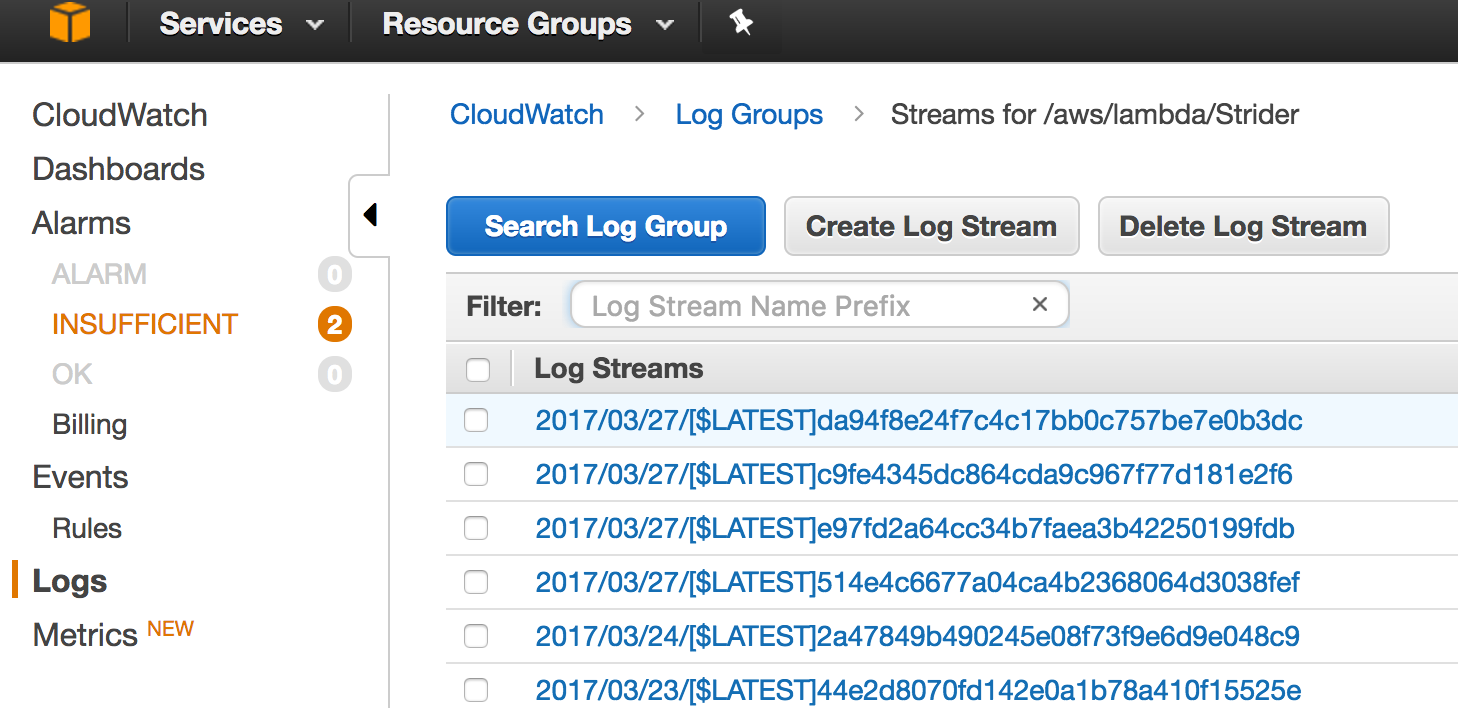

Logging

I used LambdaLogger for logging; the output is available in AWS CloudWatch.

Submission

There is a rigorous approval process in place for Alexa Skills. I would need to improve a lot of areas of the code to make it robust enough to handle any level of testing, so this may never see the light of day!

Thanks for reading.