Azure Functions with ServiceBus and Blob Storage

Serverless technologies are an area of cloud computing that I find very interesting, particularly the Function as a Service (FaaS) paradigm.

Products like AWS Lambda and Azure Functions have been around for a number of years now, and have had some pretty compelling use cases from the outset.

Everything from on-prem cron jobs that need servers to live on, up to entire RESTful APIs, is commonly finding a new home on these FaaS platforms.

Seeing features like Jobs and CronJobs in Kubernetes further backs up the feeling that these 'write once, run occasionally' frameworks have touched upon a genuine need in the industry and are not just an interesting technical solution looking for a problem.

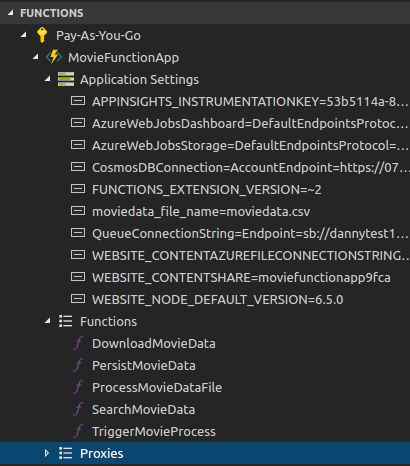

I set up a pretty trivial data ingest pipeline to try out some of the different ways that Azure Functions interacts with some of the other cloud primitives offered by Microsoft.

The pipeline is made up of a number of Azure Functions, and some of these Functions interact with Azure Service Bus, Azure Blob Storage, Azure CosmosDB and plain old HTTP.

At a high level the pipeline has a trigger that initiates the flow with a Service Bus event. The second step downloads a CSV with data on 5,000 movies and puts it into Blob Storage. In step 3 that CSV is parsed and the data is fired off as individual messages into another Service Bus queue. The last step in the pipeline ingests those messages with movie data and inserts them into a CosmosDB instance.

There is a 5th function that is exposed as a simple search API and is integrated with a static site served by Azure CDN.

In this first article I’m going to go through the first 2 steps, triggering the data flow and getting the CSV into Blob Storage.

Bindings

Before I get into the code I should call out the bindings and triggers that Azure provides for interacting with Functions. There is a function.json file that defines the configuration for a Function. The bindings section of this JSON file tells Azure how the Function should be triggered and how its output should be treated. There’s a simple example here:

    {
      "bindings": [
        {
          "queueName": "testqueue",
          "connection": "MyServiceBusConnection",
          "name": "myQueueItem",
          "type": "serviceBusTrigger",
          "direction": "in"
        }
      ],
      "disabled": false
    }

Depending on the SDK you are using (C# here, but the Java SDK is very similar), the bindings for a Function can be autogenerated from attributes on the parameters. A future version of the runtime will remove the need for the bindings section, and just the attributes will be used.

The bindings list here gives a good view of what is supported for interacting with other Azure products. HTTP and Timer are probably the two simplest bindings.

In my examples below I’m mostly working with HTTP, Service Bus, CosmosDB and Blob inputs/outputs.

Disclaimer: all these examples are trivial and contrived, and none even resembles a prototype.

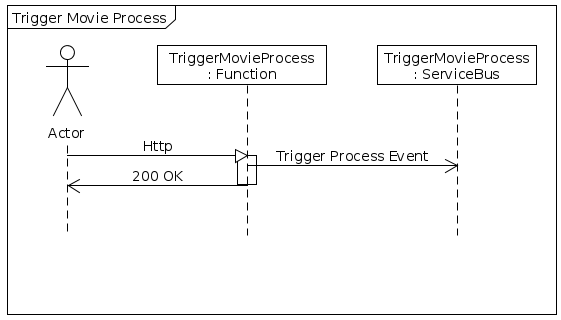

Trigger Movie Process

The first Azure Function is triggered by an HTTP call and sends an event to Service Bus each time it is invoked.

    [FunctionName("TriggerMovieProcess")]
    public static IActionResult Run(
        [HttpTrigger(AuthorizationLevel.Anonymous, "get", Route = null)] HttpRequest req,
        [ServiceBus("TriggerMovieProcessQueue", Connection = "QueueConnectionString")] ICollector<string> outputServiceBus,
        TraceWriter log)

Input

The HttpTrigger attribute on the req param tells Azure that this function should be exposed as an HTTP endpoint. The HttpRequest is available in the req object, giving you full access to headers, form data, etc.

Output

The ServiceBus attribute allows you to add messages to the Service Bus queue defined by the name and connection string in the attribute properties.

To get the Service Bus binding you need the following NuGet package:

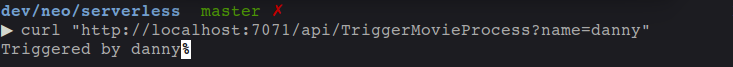

    // Read the caller's name from the query string (null if it was not supplied).
    string name = req.Query["name"];

    // Queue the start event as JSON. Note that this runs even when no name was sent.
    outputServiceBus.Add(JsonConvert.SerializeObject(
        new StartMovieProcess
        {
            TriggeredBy = name,
            TriggeredOn = DateTime.UtcNow.ToString()
        }));

    return name != null
        ? (ActionResult)new OkObjectResult($"Triggered by {name}")
        : new BadRequestObjectResult("Send a name to trigger");

I take a name from the query string and populate a StartMovieProcess object that I add to the queue.

The method returns an HTTP response to satisfy the HTTP request.
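The StartMovieProcess type itself is not shown in this article. Judging only from how it is used, it needs two string properties, so a minimal sketch could look like the following. The class body and the MessageSketch helper are assumptions for illustration, and the snippet assumes the Newtonsoft.Json package that JsonConvert comes from.

```csharp
using System;
using Newtonsoft.Json;

// Assumed shape of the message, inferred from the two properties
// the trigger function sets. The real class is not shown in the article.
public class StartMovieProcess
{
    public string TriggeredBy { get; set; }
    public string TriggeredOn { get; set; }
}

// Hypothetical helper that mirrors what the two functions do with the type.
public static class MessageSketch
{
    // Build the JSON string that the trigger function adds to the queue.
    public static string ToMessage(string name)
    {
        return JsonConvert.SerializeObject(new StartMovieProcess
        {
            TriggeredBy = name,
            TriggeredOn = DateTime.UtcNow.ToString()
        });
    }

    // Turn the queued JSON back into an object, as the next function does.
    public static StartMovieProcess FromMessage(string json)
    {
        return JsonConvert.DeserializeObject<StartMovieProcess>(json);
    }
}
```

Calling MessageSketch.FromMessage(MessageSketch.ToMessage("alice")) should give back an object whose TriggeredBy is "alice", which is the round trip the queue performs between the first two functions.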

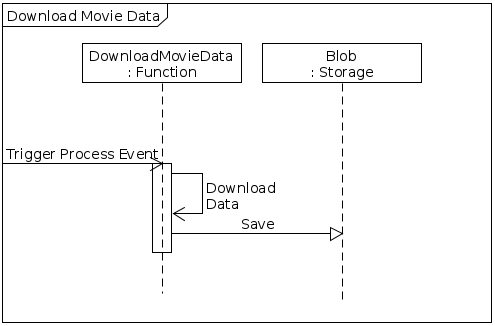

Download movie data

The second Function in the pipeline is triggered by any message in the TriggerMovieProcessQueue.

    [FunctionName("DownloadMovieData")]
    public static async Task Run(
        [ServiceBusTrigger("TriggerMovieProcessQueue", Connection = "QueueConnectionString")] string triggerEventJson,
        [BlobAttribute("moviedemo/%moviedata_file_name%", FileAccess.Write)] ICloudBlob blob,
        TraceWriter log)

The ServiceBusTrigger attribute tells Azure to invoke this function whenever messages are sent to the queue configured by the properties. The triggerEventJson parameter will contain the data from the message. I could probably have used a strongly typed domain object here instead of flattened JSON, but I haven’t tried it.
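On the strongly typed option: the Service Bus trigger can bind a JSON message body to a custom type instead of a string. The sketch below is an untested illustration of what that signature might look like. The function name DownloadMovieDataTyped and the StartMovieProcess class body are assumptions, and it is meant as an alternative to the function above, not something to deploy alongside it, since two functions on the same queue would compete for messages.

```csharp
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Host;

// Assumed shape of the message type, inferred from its usage in this article.
public class StartMovieProcess
{
    public string TriggeredBy { get; set; }
    public string TriggeredOn { get; set; }
}

public static class DownloadMovieDataTyped
{
    // Hypothetical variant: the runtime deserializes the JSON message body
    // into StartMovieProcess, so no JsonConvert call is needed in the function.
    [FunctionName("DownloadMovieDataTyped")]
    public static void Run(
        [ServiceBusTrigger("TriggerMovieProcessQueue", Connection = "QueueConnectionString")] StartMovieProcess triggerEvent,
        TraceWriter log)
    {
        log.Info($"Triggered by {triggerEvent.TriggeredBy} on {triggerEvent.TriggeredOn}");
    }
}
```

The trade-off is that a message which is not valid JSON for this type fails at binding time, before the function body runs, rather than inside code where it could be caught and logged.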

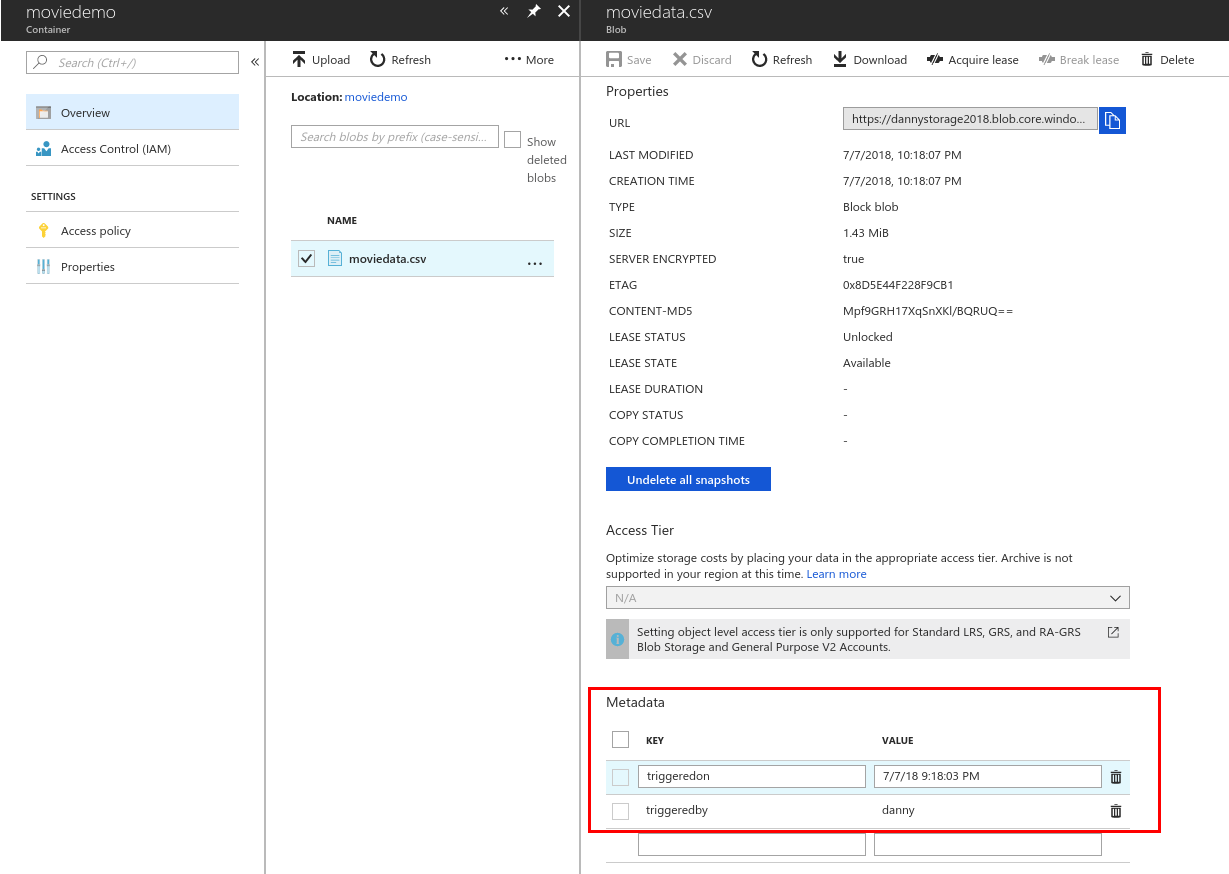

The output here is going to be a blob that I upload a file to.

    StartMovieProcess triggerEvent = JsonConvert.DeserializeObject<StartMovieProcess>(triggerEventJson);

    blob.Metadata.Add("TriggeredBy", triggerEvent.TriggeredBy);
    blob.Metadata.Add("TriggeredOn", triggerEvent.TriggeredOn);

    var uri = "https://github.com/yash91sharma/IMDB-Movie-Dataset-Analysis/blob/master/movie_metadata.csv?raw=true";

    using (var httpClient = new HttpClient())
    // Await the download instead of blocking on .Result inside an async method.
    using (var responseStream = await httpClient.GetStreamAsync(new Uri(uri)))
    {
        await blob.UploadFromStreamAsync(responseStream);
    }

In the snippet above I grab the data from a CSV hosted on GitHub and upload it to the blob on Azure Storage.

I added a couple of Metadata values to the blob from the message just to show how easy it is to push these attributes with the blob.

Once the file is uploaded this second function ends.

Setup for local development

If you are using Visual Studio you benefit from the tight developer experience that MS offers. In this article I’ll be using VS Code on Ubuntu.

The Azure Functions extension for VS Code is definitely worth looking into.

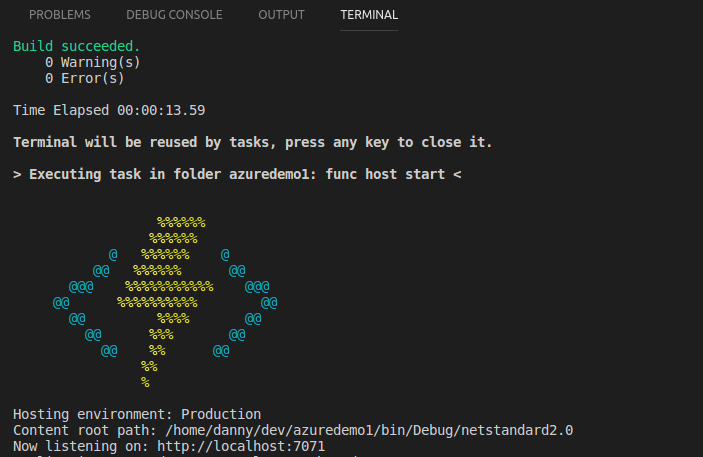

To run the functions locally you will need the SDK NuGet package and the Azure Functions Core Tools, which offer a local runtime for Functions.

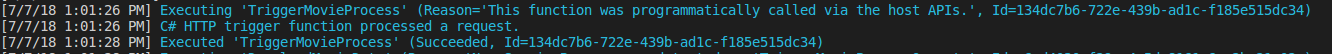
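When running locally, the names used in the attributes earlier (the QueueConnectionString connection and the %moviedata_file_name% placeholder in the blob path) are resolved from app settings, which the Core Tools read from a local.settings.json file in the project. A minimal sketch of that file follows. All values are placeholders rather than the settings used for this pipeline, the file name movie_metadata.csv is only an example, and whether FUNCTIONS_WORKER_RUNTIME is required depends on the runtime version.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "<storage account connection string>",
    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
    "QueueConnectionString": "<Service Bus connection string>",
    "moviedata_file_name": "movie_metadata.csv"
  }
}
```

The Blob binding has no Connection property set, so it falls back to the AzureWebJobsStorage account. This file usually holds secrets, so it is best kept out of source control.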

Running the Functions

Running the functions on the local Function host is the same as running any other application once you have the prerequisites above set up.

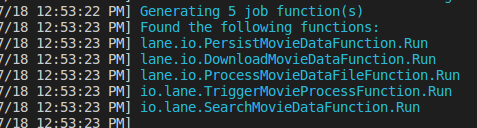

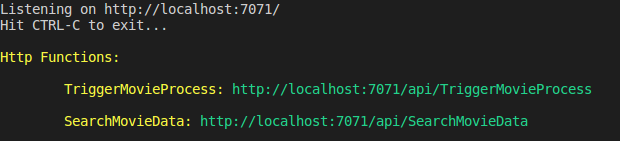

The runtime gives some good logs showing which Functions have been detected and the URLs that HTTP-triggered Functions can be invoked from.
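Once the host is running, the pipeline can be kicked off by calling the first function over HTTP. The sketch below assumes the Core Tools defaults (port 7071 and the api route prefix, with the function name as the route because Route is null). The TriggerClient class and its method names are hypothetical, so check the URL against what your own host prints.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical client for the locally hosted TriggerMovieProcess function.
public static class TriggerClient
{
    // Build the request URL. The base address is an assumed local default.
    public static Uri BuildTriggerUri(string name, string baseUrl = "http://localhost:7071")
    {
        return new Uri($"{baseUrl}/api/TriggerMovieProcess?name={Uri.EscapeDataString(name)}");
    }

    // Send the GET request and return the response body as text.
    public static async Task<string> TriggerAsync(string name)
    {
        using (var client = new HttpClient())
        {
            HttpResponseMessage response = await client.GetAsync(BuildTriggerUri(name));
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

Awaiting TriggerClient.TriggerAsync("alice") should return the body produced by the function, and the host log should then show DownloadMovieData picking up the queued message.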

That’s all I’m going to cover in this part. In the next article I’ll look at the Functions that process the blob and write to CosmosDB.